Introduction to Text Preprocessing

Understanding the terms and their relationships

Text analysis is about digging into written words to find meaning, spot patterns, track sentiment, or identify key topics and themes. It’s essentially the “what does this text tell us?” part of the process, turning unstructured words into structured insights. Every day, countless emails, news articles, social media posts, scientific papers, and reports are produced. They hide patterns, opinions, and signals that numbers alone can’t capture. By applying computational text analysis, we can uncover trends, measure public sentiment, compare perspectives, and extract meaning from collections of information far too large to read by hand.

Text preprocessing is the set of steps used to clean, standardize, and structure raw text before it can be meaningfully analyzed. This may include removing punctuation, normalizing letter case, eliminating stop words, breaking text into tokens (words or sentences), and reducing words to their base forms through lemmatization. Preprocessing reduces noise and inconsistencies in the text, making it ready for computational analysis.

Natural Language Processing (NLP) provides the computational methods that make text analysis possible. It is a branch of artificial intelligence that enables computers to understand, interpret, and generate human language. NLP techniques include part-of-speech tagging, named entity recognition, sentiment detection, topic modeling, and transforming words into numerical representations for analysis.
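As a small illustration of that last technique, here is a base-R sketch of a bag-of-words document-term matrix, one of the simplest numerical representations of text. Each row is a document, each column is a vocabulary word, and each cell counts how often that word appears. The two documents and the naive space-splitting tokenizer are assumptions made only for this example; real pipelines tokenize more carefully.

```r
# Two made-up documents (hypothetical, not from the workshop data)
docs <- c(d1 = "the cat sat", d2 = "the cat ran fast")

# Tokenize by splitting on single spaces (a deliberately naive tokenizer)
tokens <- strsplit(docs, " ", fixed = TRUE)
names(tokens) <- names(docs)

# The vocabulary is every distinct token, sorted alphabetically
vocab <- sort(unique(unlist(tokens)))

# Count each vocabulary word per document; factor() makes absent words count as 0
counts <- vapply(
  tokens,
  function(tk) as.vector(table(factor(tk, levels = vocab))),
  integer(length(vocab))
)

# Transpose so that documents are rows and vocabulary words are columns
dtm <- t(counts)
colnames(dtm) <- vocab
dtm
# d1 row: cat = 1, fast = 0, ran = 0, sat = 1, the = 1
# d2 row: cat = 1, fast = 1, ran = 1, sat = 0, the = 1
```

Once text is in this form, ordinary numeric tools (sums, distances, models) can be applied to it.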

These three elements are interdependent. Preprocessing prepares messy text for analysis, NLP provides the computational tools to process and interpret it, and text analysis applies the results to answer meaningful questions. Without preprocessing, NLP models struggle with noise; without NLP, text analysis would be limited to surface-level counts; and without text analysis, NLP would have little purpose beyond technical processing. Together, they transform raw text into structured, actionable insights.

From messy to analysis-ready text

Of course, text isn’t quite as “analysis-ready” as numbers. Have you ever looked at raw text data and thought, where do I even start? That’s the challenge: before computers can process text meaningfully, it usually needs to be cleaned and standardized. For example, computers see “Happy,” “happy,” and “HAPPY!!!” as three different words; preprocessing fixes that.
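A minimal base-R sketch of that fix: lowercase with tolower(), then strip punctuation with the POSIX [[:punct:]] class in gsub(). The workbook’s stringr package offers equivalents (str_to_lower() and str_remove_all()). Base R is used here only to keep the example free of dependencies.

```r
# Three spellings of the same word, as they might appear in raw comments
raw <- c("Happy", "happy", "HAPPY!!!")
length(unique(raw))    # 3: to the computer these are different strings

# Normalize: lowercase everything, then remove punctuation characters
clean <- gsub("[[:punct:]]", "", tolower(raw))
clean                  # "happy" "happy" "happy"
length(unique(clean))  # 1
```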

It’s extra work, but it’s also the foundation of any meaningful analysis. The exciting part is what happens next: once the text is shaped and structured, it can reveal insights you’d never notice just by skimming. And here’s the real advantage: computers can process enormous amounts of text far faster than humans, and often more consistently, allowing us to see patterns and connections that would otherwise stay hidden.

Garbage in, Garbage out

You’ve probably heard the phrase “garbage in, garbage out”, right? It’s a core principle in computing: the quality of the output heavily depends on the quality of the input.

This principle holds especially true for text analysis, because human language is naturally messy, inconsistent, and often ambiguous.

Key text preprocessing steps include normalization (noise reduction), stop word removal, tokenization, and lemmatization, which are depicted and explained in the handout below:

Source: Data Literacy Series https://perma.cc/L8U5-ZEXD
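As a rough preview of how those steps chain together, here is a sketch on two made-up comments. It uses tidytext for tokenization and stop word removal and textstem for lemmatization, both of which are in the project’s package list. Note that unnest_tokens() lowercases and drops punctuation by default, so part of the normalization happens during tokenization. Exactly which words survive depends on tidytext’s stop_words lexicons and textstem’s lemma dictionary.

```r
library(dplyr)     # tibble(), anti_join(), mutate(), %>%
library(tidytext)  # unnest_tokens(), stop_words
library(textstem)  # lemmatize_words()

# Two made-up comments (hypothetical, not from comments.csv)
toy <- tibble(
  id   = 1:2,
  text = c("The cats were RUNNING around!!!", "I loved these movies, honestly.")
)

toy_tokens <- toy %>%
  # Tokenization: one row per word; lowercases and strips punctuation by default
  unnest_tokens(word, text) %>%
  # Stop word removal: drop any word that appears in tidytext's stop_words table
  anti_join(stop_words, by = "word") %>%
  # Lemmatization: map each remaining word to its dictionary base form
  mutate(lemma = lemmatize_words(word))

toy_tokens
```

The workbook walks through each of these steps separately, with more control over what gets removed.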

In the next chapters, we’ll dive deeper into this pipeline to prepare the data for further analysis. But first, let’s take a quick look at the data to get a better grasp of the challenge at hand.

Getting Things Started

The data we pulled for this exercise comes from real social media posts, so we know it will be messy before we even open it. Because it is natural language, this kind of data is unstructured and often filled with inconsistencies and irregularities.

Before we can apply any meaningful analysis or modeling, it’s crucial to visually inspect the data to get a sense of what we’re working with. Eyeballing the raw text helps us identify common patterns, potential noise, and areas that will require careful preprocessing to ensure the downstream tasks are effective and reliable.
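Eyeballing goes further with a few targeted checks. Here is a base-R sketch that flags some typical kinds of social media noise with regular expressions. The comments are invented for illustration, not taken from the workshop data, and the patterns are simple assumptions that will miss edge cases.

```r
# Hypothetical comments (made up, NOT from comments.csv) showing typical noise
sample_comments <- c(
  "LOVED it!!! Check https://example.com",
  "@maria I can't believe this happened",
  "meh...   not great",
  "Best day ever \U0001F600"
)

# One logical column per kind of noise, flagged with simple regular expressions
noise <- data.frame(
  comment      = sample_comments,
  has_url      = grepl("https?://", sample_comments),
  has_mention  = grepl("@\\w+", sample_comments, perl = TRUE),
  has_shouting = grepl("\\b[A-Z]{2,}\\b", sample_comments, perl = TRUE),
  has_nonascii = grepl("[^\\x01-\\x7F]", sample_comments, perl = TRUE),
  extra_spaces = grepl("\\s{2,}", sample_comments, perl = TRUE)
)

# How many comments show each kind of noise
colSums(noise[, -1])
```

Counts like these help decide which cleaning steps (URL removal, emoji handling, whitespace squashing) are worth the effort for a given dataset.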

Getting Files and Launching RStudio

Time to launch RStudio and our example! Click on this link to download the text-preprocessing subfolder from the text-analysis-series folder. Among other files, this subfolder contains the dataset we will be using (comments.csv); a Quarto worksheet named preprocessing-workbook.qmd (.qmd is the Quarto document file extension; learn more about Quarto), where we will do our coding; and an renv.lock file (learn more about renv) listing all the R packages, and their versions, that we’ll use during the workshop.

This setup ensures a self-contained environment, so you can run everything needed for the session without installing or changing any packages that might affect your other R projects.

After downloading this subfolder, double-click the project file text-preprocessing.Rproj to launch RStudio. Then find and open the file preprocessing-workbook.qmd in RStudio.

Setting up the environment with renv

Next, we need to install the `renv` package so you can set up the working environment correctly, with all the packages and dependencies we will need. In the console, type:

install.packages("renv")

Then, still in the console, restore the project environment. This installs packages in the R project to match the versions recorded in the renv.lock file we have shared with you:

renv::restore()

Matrix Package Incompatible with R

If you encounter incompatibility issues with the Matrix package (or any other) due to your R version, you can explicitly install the package by running the following in your console:

renv::install("Matrix")

Next, update your renv.lock file to reflect this version by running:

renv::snapshot()

Loading Packages & Inspecting the Data
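Before loading anything, you may want to confirm that renv::restore() actually made every package available. Here is a minimal base-R sketch, assuming the package list matches the library() calls below. requireNamespace() returns FALSE instead of throwing an error when a package is missing, so it works as a quiet check.

```r
# Packages the workbook loads (the same list as the library() calls below)
pkgs <- c("tidyverse", "tidytext", "stringr", "stringi",
          "dplyr", "textclean", "emo", "textstem")

# requireNamespace() returns TRUE/FALSE rather than erroring on a missing package
available <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
missing_pkgs <- pkgs[!available]

if (length(missing_pkgs) > 0) {
  message("Missing: ", paste(missing_pkgs, collapse = ", "),
          " (try running renv::restore() again)")
} else {
  message("All workbook packages are available.")
}
```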

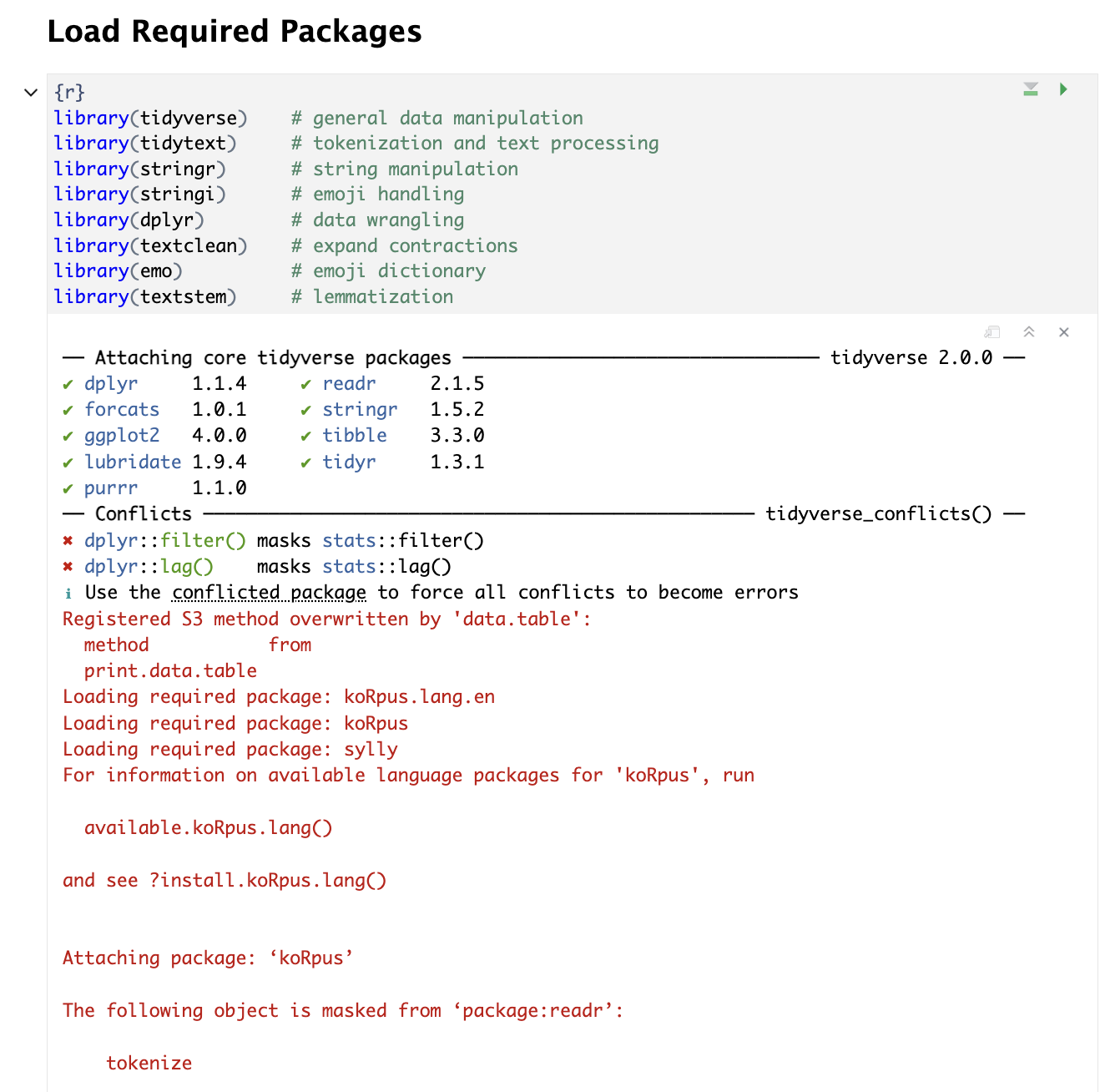

Let’s start by loading all the required packages that are pre-installed in the project:

library(tidyverse) # general data manipulation (also attaches dplyr and stringr)

library(tidytext) # tokenization and text processing

library(stringr) # string manipulation

library(stringi) # emoji handling

library(dplyr) # data wrangling

library(textclean) # expand contractions

library(emo) # emoji dictionary

library(textstem) # lemmatization

After running these lines, you should get:

Alright! With all the necessary packages loaded, let’s take a look at the dataset we’ll be working with:

# Inspecting the data

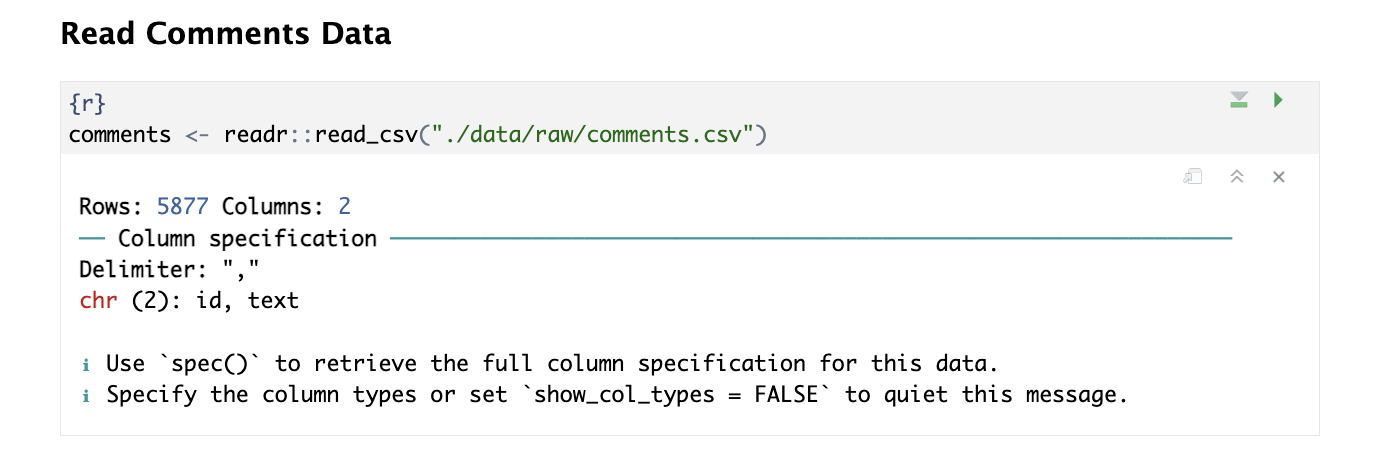

comments <- readr::read_csv("./data/raw/comments.csv")

This should show that our dataset contains 5877 comments and two columns, and it should add the comments dataset to your environment:

In the workbook, you’ll notice that we’ve pre-populated some chunks below to save you from tedious typing. Don’t worry about them for now; we’ll come back to them shortly.